Why Is HMaster Crucial for HBase Metadata and Load Balancing?

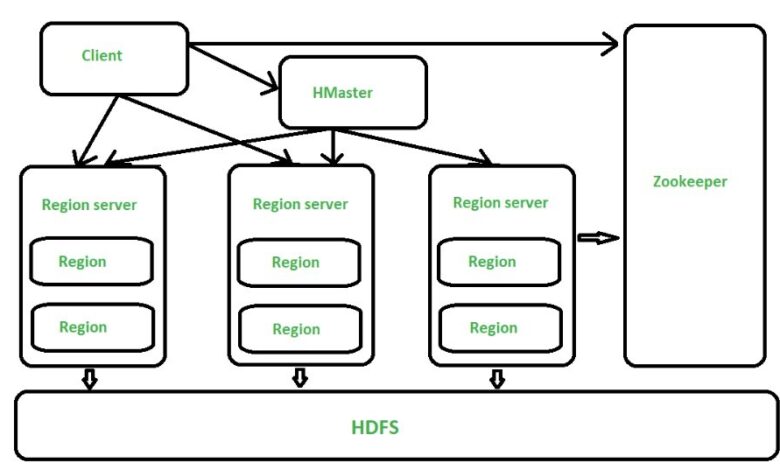

HBase’s HMaster coordinates metadata and load management in a distributed HBase cluster. As the master server, it assigns regions to region servers, handles metadata operations, and keeps the cluster stable for reads and writes. Centralizing these tasks helps HBase stay dependable for high-availability data applications, especially when data must be spread evenly across many servers. Because HMaster has a view of the whole cluster, it can keep data organized and resources balanced.

What Makes HMaster Critical in HBase?

Managing Metadata Efficiently

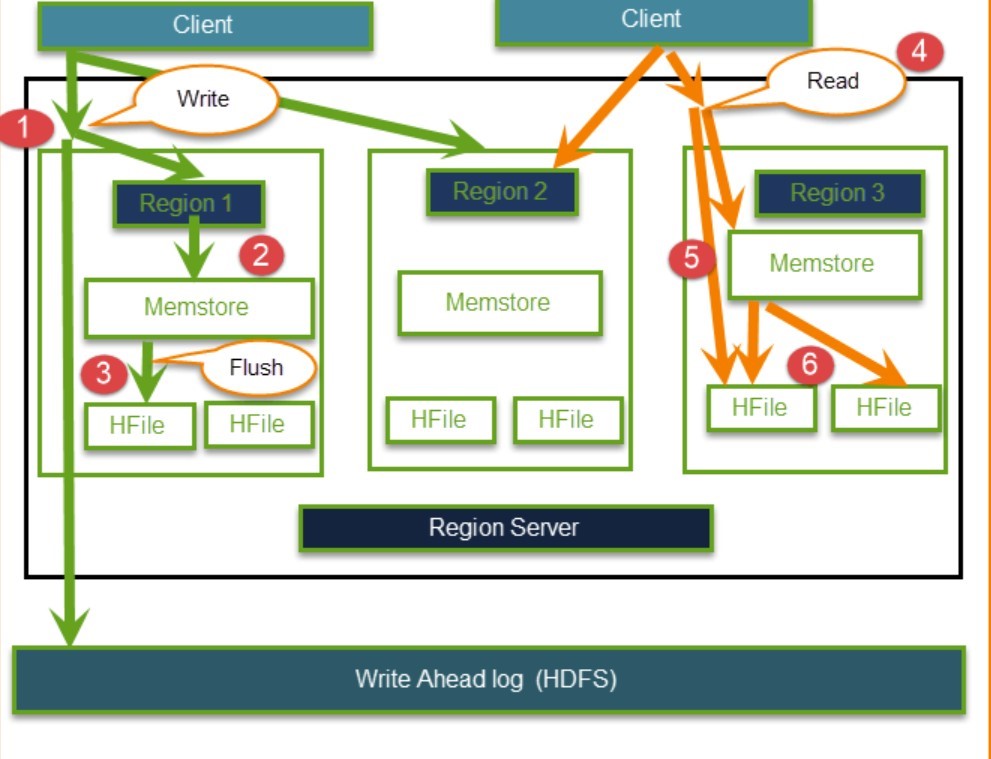

HMaster centralizes metadata management in HBase: creating, altering, and deleting tables, along with other schema changes, all go through it. Because HMaster supervises these operations, table definitions stay accurate and current across the cluster. This centralized approach lets clients locate and read structured data quickly and reliably, and it gives applications that organize very large datasets a consistent foundation to build on.
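The table operations described above can be pictured with a small in-memory model. This is only an illustrative sketch: `TableCatalog` and its methods are hypothetical names, not part of any HBase API. It assumes two HBase conventions, namely that a table has at least one column family and that a table is disabled before it is deleted.

```python
class TableCatalog:
    """Toy in-memory registry of table schemas (hypothetical, not an HBase API)."""

    def __init__(self):
        # table name -> {"families": set of column family names, "enabled": bool}
        self._tables = {}

    def create_table(self, name, families):
        if name in self._tables:
            raise ValueError(f"table {name!r} already exists")
        if not families:
            raise ValueError("a table needs at least one column family")
        self._tables[name] = {"families": set(families), "enabled": True}

    def add_family(self, name, family):
        # Schema change: add a column family to an existing table.
        self._tables[name]["families"].add(family)

    def disable_table(self, name):
        self._tables[name]["enabled"] = False

    def delete_table(self, name):
        # Assumed convention: only a disabled table may be deleted.
        if self._tables[name]["enabled"]:
            raise RuntimeError(f"disable {name!r} before deleting it")
        del self._tables[name]

    def describe(self, name):
        return sorted(self._tables[name]["families"])

    def list_tables(self):
        return sorted(self._tables)


catalog = TableCatalog()
catalog.create_table("orders", ["info"])
catalog.add_family("orders", "audit")
print(catalog.describe("orders"))  # ['audit', 'info']
catalog.disable_table("orders")
catalog.delete_table("orders")
print(catalog.list_tables())       # []
```

Routing every schema change through one object is what keeps the definitions consistent in this toy model; a real cluster also has to persist and propagate those changes, which the sketch leaves out.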

Overseeing Region Servers

Overseeing the cluster’s region servers is a key function of HMaster. At startup, it distributes regions across the servers according to their capacity so that load starts out balanced. It then monitors the servers continuously and reassigns regions when one of them becomes overloaded, keeping the workload even across the cluster. This vigilance makes bottlenecks less likely, improves responsiveness, and helps HBase process very large datasets. HBase relies on it to operate reliably in distributed environments.
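A minimal sketch of the startup assignment step is shown below. It is a simplification, not HBase’s actual assignment logic: it treats every server as having equal capacity and simply deals regions out in round-robin order. The function name and the `rs1`/`region-0` style identifiers are made up for illustration.

```python
from collections import defaultdict


def assign_round_robin(regions, servers):
    """Deal regions out to servers one at a time, in order.

    Assumption: all servers have equal capacity, so an even count is "balanced".
    """
    if not servers:
        raise ValueError("at least one region server is required")
    assignment = defaultdict(list)
    for i, region in enumerate(regions):
        assignment[servers[i % len(servers)]].append(region)
    return dict(assignment)


regions = [f"region-{n}" for n in range(7)]
servers = ["rs1", "rs2", "rs3"]
plan = assign_round_robin(regions, servers)
for server in servers:
    print(server, plan[server])
```

With seven regions and three servers, no server ends up with more than one region above any other, which is the starting point the later rebalancing tries to preserve.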

Optimizing Data Distribution

HMaster optimizes data distribution by balancing the workload of each region server. It monitors the cluster and adjusts region placement so that no single server becomes overloaded, which improves access speed. This balanced placement keeps data access fast and latency low even under heavy demand. With HMaster in charge of region allocation, HBase can scale horizontally without sacrificing efficiency, accessibility, or organization.

How Does HMaster Handle Load Balancing in HBase?

Balancing Region Servers Under Load

HMaster tracks the load on each region server; if one server starts to become overloaded, HMaster reassigns some of its regions to restore balance. By adjusting the load among servers continuously, it keeps the system efficient even with very large volumes of data and helps maintain performance during peak use. Load balancing is essential for reliable data access, because it prevents a single overloaded region server from degrading the whole HBase system.
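The rebalancing idea can be sketched as a planner that moves regions from the busiest server to the least busy one until the counts are within one of each other. This is an assumption-laden toy: it measures load purely by region count, whereas a real balancer can weigh other costs, and `plan_moves` is a hypothetical name rather than an HBase function.

```python
def plan_moves(assignment):
    """Return (region, source, target) moves that even out region counts.

    `assignment` maps server name -> list of region names; it is not modified.
    Load is approximated by region count only (a simplifying assumption).
    """
    load = {server: list(regions) for server, regions in assignment.items()}
    moves = []
    while load:
        busiest = max(load, key=lambda s: len(load[s]))
        idlest = min(load, key=lambda s: len(load[s]))
        # Stop once the spread between servers is at most one region.
        if len(load[busiest]) - len(load[idlest]) <= 1:
            break
        region = load[busiest].pop()
        load[idlest].append(region)
        moves.append((region, busiest, idlest))
    return moves


current = {"rs1": ["a", "b", "c", "d", "e"], "rs2": ["f"], "rs3": []}
for region, source, target in plan_moves(current):
    print(f"move {region}: {source} -> {target}")
```

In this example three regions leave `rs1`, and every server finishes with two regions. Producing a list of moves instead of mutating the assignment directly keeps the plan inspectable before anything is actually reassigned.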

Redistributing Data for Optimal Access

Whenever it detects an imbalance, HMaster redistributes regions among the region servers to keep data access efficient. When regions are unevenly spread, it moves some of them to underused servers to improve access speed and reduce latency. This redistribution keeps the user experience smooth as demand rises. By controlling where regions are served, HMaster gives HBase room to absorb demand spikes without sacrificing accessibility, which makes HBase a dependable option for applications that need fast data access and adaptive load management.

Monitoring System Health and Performance

HMaster monitors the HBase cluster in real time, checking on the region servers and other components to catch problems before they escalate. It tracks performance and load metrics across servers so that issues can be addressed before they affect the cluster’s efficiency. This continuous monitoring helps keep data availability high and gives HBase applications a reliable, efficient data environment.
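As a rough illustration of metric-based monitoring, the sketch below flags servers whose request rate is well above the cluster average. The metric (requests per second), the 1.5x threshold, and the function name are all assumptions chosen for the example; they are not HBase defaults.

```python
from statistics import mean


def flag_overloaded(requests_per_sec, factor=1.5):
    """Return the servers whose request rate exceeds `factor` times the mean.

    `requests_per_sec` maps server name -> reported request rate.
    The threshold factor is an arbitrary illustrative value.
    """
    if not requests_per_sec:
        return []
    average = mean(requests_per_sec.values())
    return sorted(
        server for server, rate in requests_per_sec.items()
        if rate > factor * average
    )


reported = {"rs1": 900, "rs2": 300, "rs3": 300}
print(flag_overloaded(reported))  # ['rs1']
```

Here the mean is 500 requests per second, so only `rs1` crosses the 750 threshold. A flagged server would be a natural candidate for having some of its regions moved elsewhere.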

What Happens if HMaster Fails?

Automatic Failover Mechanism

If the active HMaster fails, HBase’s automated failover is designed to keep disruption to a minimum. A backup HMaster takes over as the active master, so region assignment and metadata maintenance can resume. This handover keeps data operations running and limits the impact on performance, so HBase continues to work reliably through unexpected disruptions. HBase’s high availability depends on this mechanism, which is critical for applications that need constant access to data.
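The handover can be modeled as a race for a single lock: whichever master holds it is active, and when the holder disappears a backup acquires it. The sketch below uses a plain in-memory object as a stand-in for the coordination service; `MasterLock`, `elect`, and the master names are hypothetical, and real failover involves session timeouts and state recovery that are not modeled here.

```python
class MasterLock:
    """In-memory stand-in for a coordination service: at most one holder."""

    def __init__(self):
        self.holder = None

    def try_acquire(self, name):
        # The first caller wins; later callers succeed only if they already hold it.
        if self.holder is None:
            self.holder = name
        return self.holder == name

    def release(self, name):
        # Models the active master crashing or its session expiring.
        if self.holder == name:
            self.holder = None


def elect(lock, candidates):
    """Return the first candidate that manages to hold the lock, else None."""
    for name in candidates:
        if lock.try_acquire(name):
            return name
    return None


lock = MasterLock()
active = elect(lock, ["master-a", "master-b"])
print("active:", active)            # active: master-a

lock.release("master-a")            # simulate failure of the active master
new_active = elect(lock, ["master-b"])
print("after failover:", new_active)  # after failover: master-b
```

While `master-a` holds the lock, `master-b` cannot become active; only after the release does the backup take over, which is the property that prevents two masters from acting at once.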

Restoring Load Balancing with Backup HMaster

When the active HMaster fails, the backup HMaster also takes over load-balancing duties. It checks how each region server is handling the current load and adjusts region assignments as needed to restore a balanced distribution of work. By re-establishing load balancing quickly, the backup keeps data access efficient and limits performance problems. This fast response avoids long-lived imbalances and helps HBase keep performing reliably after an HMaster failure.

Data Integrity in Failover Situations

HBase works with ZooKeeper to keep data intact through an HMaster failover. ZooKeeper coordinates the handover, so that once the backup HMaster takes over, metadata and schema remain consistent throughout the cluster. This coordination protects data integrity, so users do not have to worry about data loss or inconsistent access. Thanks to the transition handled by ZooKeeper and HMaster, HBase keeps running reliably during failover, which matters most to large-scale applications that depend on constant, correct data access.

Conclusion

HMaster plays a critical role in the HBase architecture: it manages metadata, balances load across region servers, and oversees cluster health. By allocating regions, monitoring server performance, and supporting automated failover, it provides the stable environment that distributed data management requires. These functions improve HBase’s efficiency and scalability and make it a robust choice for large, data-driven applications that need consistent data and uninterrupted operation.